Over the last couple months I’ve been writing about Intune’s journey to become a globally scaled cloud service running on Azure. I’m treating this as Part 3 (here’s Part 1 and Part 2) of a 4-part series.

Today, I’ll explain how we were able to make such dramatic improvements to our SLAs, scale, performance, and engineering agility.

I think the things we learned while doing this can apply to any engineering team building a cloud service.

Last time, I noted the three major things we learned during the development process:

- Every operation that copies or moves data from one location to another must include data integrity checks to make sure that the copied data is consistent with the source data. We discovered that there are a variety of efficient/intelligent ways to achieve this without requiring an excessive amount of time or memory.

- It is a very bad idea to try building your own database for these purposes (NoSQL, SQL, etc.), unless you are already in the database business.

- It’s far better to over-provision than over-optimize. In our case, because we set our orange line thresholds low, we had sufficient time to react and re-architect.

After we rolled out our new architecture, we focused on evolving and optimizing our services/resources and improving agility. We came up with 4 groups of goals to evolve quickly and at high quality:

- Availability/SLAs

- Scale

- Performance

- Engineering agility

Here’s how we did it:

#1: Availability/SLAs

The overarching goal we defined for availability/SLA (strictly speaking, SLO) was to achieve 4+ 9’s for all our Intune services.

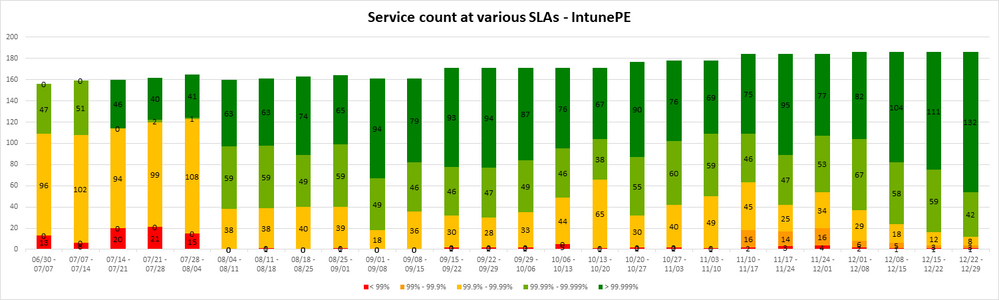

Before we started the entire process described in this blog series, less than 25% of our services were running at 4+ 9’s, and 90% were running at 3+ 9’s.

Clearly something needed to change.

First, a carefully selected group of engineers began a systematic review of where we needed to drive SLA improvements across the 150+ services. Based on what we learned here, we saw, over the next six months, dramatic improvements. This review uncovered a variety of hidden issues and the fixes we rolled out made a huge difference. Here are a few of the big ones:

- Retries:

Our infrastructure supported a rudimentary form of retries, and it needed some additional technical sophistication, specifically in terms of customized request timeouts. Initially, there was no way to cancel a request if it took longer than a specified time for a specific service. This meant that a request could never really be retried, because by the time a timeout happened, it had most likely already exceeded the threshold for the end-to-end operation. To address this, we added a request timeout feature that enabled services to specify custom limits on the maximum time a request can take before being canceled. This allowed services to specify appropriate time limits and gave them several other retry semantics (such as backoffs, etc.) within the bounds of the overall end-to-end operation. This was a huge improvement, and it reduced our end-to-end timeouts by more than half.

- Circuit breakers:

It didn’t take long for us to realize that retries can cause a retry storm and result in timeouts becoming much worse. We added a circuit breaker pattern to handle this.

- Caching:

We started caching responses for repeated requests that matched the same criteria without breaking security boundaries.

- Threading:

During cold starts and request spikes, we noticed that the underlying framework (.NET) took time to spin up threads. To address this, we adjusted the minimum number of worker threads a service needs to maintain to account for these behaviors and made it configurable on a per-service basis. This eliminated almost all of the timeouts that happened during these spikes and/or cold starts.

- Intelligent routing:

This was a learning algorithm that determined the target service that had the best chance of successfully handling the request. This kind of routing avoided a hung or slow node, a deadlocked process, a slow network VM, and any other random issues experienced by the services. In a distributed cloud service operating at scale, these kinds of underlying issues must be expected and are more of a norm than an exception. This ended up being a critical feature for us to design and implement, and it made a huge difference across the board, especially when it came to reducing tail latencies.

- Customized configurations:

Each of our services had slightly different requirements or behaviors, and it was important for us to provide knobs to customize certain settings for optimal behavior. Examples of such customized settings included: HTTP server queue lengths, service point count, max pending accepts, etc.
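To make the retry item above more concrete, here is a minimal Python sketch of per-attempt timeouts that cancel a slow request, with backoff, all bounded by one end-to-end budget. It is only an illustration of the idea, not the Intune infrastructure (which runs on .NET); the function name `call_with_retries`, its parameters, and the default values are all hypothetical.

```python
import asyncio
import random
import time


async def call_with_retries(make_request, attempt_timeout, overall_timeout,
                            max_attempts=4, base_backoff=0.01):
    """Retry an async request, canceling slow attempts, within one overall budget.

    All names and default values here are hypothetical.
    """
    deadline = time.monotonic() + overall_timeout
    last_error = None
    for attempt in range(max_attempts):
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break  # the end-to-end budget is spent; do not start another attempt
        try:
            # wait_for cancels the in-flight attempt once its time limit passes,
            # which leaves room in the budget for another try.
            return await asyncio.wait_for(
                make_request(), timeout=min(attempt_timeout, remaining))
        except (asyncio.TimeoutError, ConnectionError) as exc:
            last_error = exc
        # Exponential backoff with jitter, never sleeping past the deadline.
        backoff = base_backoff * (2 ** attempt) * random.uniform(0.5, 1.0)
        remaining = deadline - time.monotonic()
        await asyncio.sleep(max(0.0, min(backoff, remaining)))
    raise TimeoutError("end-to-end budget exhausted") from last_error


if __name__ == "__main__":
    attempts = {"count": 0}

    async def flaky_request():
        attempts["count"] += 1
        if attempts["count"] < 3:
            await asyncio.sleep(1.0)  # simulates a hung node; this attempt is canceled
        return "policy-payload"

    print(asyncio.run(call_with_retries(flaky_request, 0.05, 2.0)))
```

The key design point is that the per-attempt limit is much smaller than the end-to-end limit. Without that gap, the first timeout already consumes the whole budget and a retry is never possible.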

The result of all the above efforts was phenomenal. The chart below demonstrates this dramatic improvement after the changes were rolled out.

You’ll notice that we started with less than 25% of services at 4+ 9’s, and by the time we rolled out all the changes, 95% or more of our services were running at 4+ 9’s! Today, Intune maintains 4+ 9’s for over 95% of our services across all our clusters around the world.
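For readers unfamiliar with the circuit breaker pattern mentioned in the list above, the following is a minimal, generic Python sketch of it. This is the textbook pattern rather than our actual implementation; the class name, the thresholds, and the injectable `clock` parameter are assumptions made for illustration.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without contacting the dependency."""


class CircuitBreaker:
    """Hypothetical breaker: opens after consecutive failures, retries after a pause."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Fail fast instead of piling more load onto a struggling service.
                raise CircuitOpenError("dependency unavailable; failing fast")
            # Otherwise the breaker is half-open: let one trial call through.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers get an immediate error instead of waiting on another timeout, which is what keeps retries from amplifying into a retry storm.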

#2: Scale

The re-architecture process enabled us to rely primarily on scaling out the cluster to handle our growth. It was clear, however, that the growth we were experiencing also required scale-up improvements. The biggest workload for Intune is triggered when a device checks in to the service in order to receive policies, settings, apps, etc. – and we chose this workload as our first target.

The scale target we set was 50k devices checking in within a short period (approximately 10 minutes) for a given cluster. For reference, at the time we set this goal, our scale was at 3k devices in a 10-minute window for an individual cluster – in other words, our scale had to increase by about 17x.

As with the SLA work we did, a group of engineers pursued this effort and acted as a single unit to tackle the problem. Some of the issues they identified and improved included:

- Batching:

Some of the calls were made sequentially, and we identified a way for these calls to be batched together and sent in one request. This avoided multiple round trips and serialization/deserialization costs.

- Service Instance Count:

Some of the critical services in our cluster were running with a low instance count. We realized that these were the first bottlenecks that prevented us from scaling up. By simply increasing the instance count of the services without changing the node or cluster sizes, we completely eliminated these bottlenecks.

- Caching:

Some of the properties in an account/tenant or user were frequently accessed. These properties were accessed by various calls to the service(s) that held this data. We realized that we could cache these properties in the token that a request carried. This eliminated the need for many calls to other services, along with the latencies and resource consumption associated with them.

- Reduce Calls:

We developed several ways to reduce calls from one service to another. For example, we used a Bloom filter to determine if a change happened, and then we used that information to reduce a load of about 1 million calls to approximately 10k.

- Leverage SLA improvements:

We leveraged many of the improvements called out in the SLA section above, even though both efforts were operating (more or less) in parallel at the time. We also leveraged the customized configurations to experiment, learn, and test.
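The Bloom filter idea from the "Reduce Calls" item can be sketched in a few lines of Python. This is a generic illustration under assumptions of my own: the `BloomFilter` class, its sizing, and the notion that a caller checks a filter of changed device IDs before making a call are all hypothetical, not a description of the Intune code.

```python
import hashlib


class BloomFilter:
    """Compact set membership: no false negatives, rare false positives."""

    def __init__(self, num_bits=1 << 16, num_hashes=4):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item):
        # Derive several bit positions per item by salting one hash function.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))


if __name__ == "__main__":
    changed = BloomFilter()
    for device_id in ("device-7", "device-42"):  # hypothetical IDs with changes
        changed.add(device_id)

    all_devices = [f"device-{n}" for n in range(1000)]
    # Only devices the filter flags need a real call to the owning service.
    to_call = [d for d in all_devices if changed.might_contain(d)]
    print(f"{len(to_call)} calls instead of {len(all_devices)}")
```

Because a Bloom filter never reports a false negative, skipping a call when the filter says "no change" is safe; an occasional false positive only costs one unnecessary call.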

By the end of this exercise, we were successfully able to increase the scale from 3k devices checking in to 70k+ device check-ins, an increase of more than 23x, and we did this without scaling out the cluster!

#3: Performance

Our goal for performance had a very specific target: Culture change.

We wanted to ensure that our performance was continuously evaluated in production and we wanted to be able to catch performance regressions before releasing to production.

To do this, we first used Azure profiler and associated flame graphs to perform continuous profiling in production. This process showed our engineers how to drive several key improvements, and subsequently, it became a powerful daily tool for the engineers to determine bottlenecks in code, inefficiencies, high CPU usage, etc. Some of the improvements identified by the engineering team as a result of this continuous profiling include:

- Blocking Calls:

Some of the calls made from one service to another were incorrectly blocking instead of following async patterns. We fixed this by removing the blocking calls and making it asynchronous. They resulted in reduced timeouts and thread pool exhaustions. - Locking:

Another pattern we noticed using the profiler was lock contention between threads. We were clearly able to examine these via code that used the profiler’s call stacks to fix the bugs and remove the associated latencies. - High CPU:

There were numerous instances where we were easily able to catch high CPU situations using the profiles and quickly determine root causes and fixes. - Tail latency:

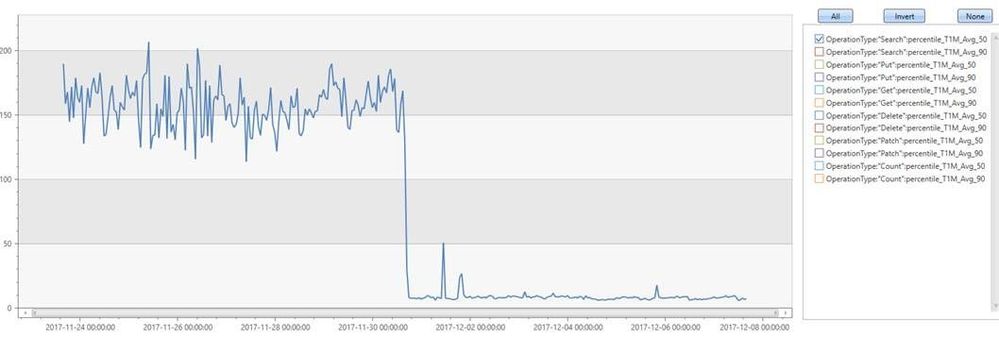

While investigating certain latencies associated with devices checking in or our portal flows, we noticed that some of the search requests were being sent across to all the partitions of a service. In many cases, there is just one partition that holds this data and the search can be performed against that single partition instead of fanning out across all of them. We successfully made optimizations to do a search directly against the partition that held the data – and the result was a drop in latency from 200 msec to less than 15 msec (see chart below). The end result was improved response times in devices checking in and faster data retrievals in our ITPro portal.
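The difference between fanning out a search and targeting the single partition that owns the data, as described in the tail latency item above, can be illustrated with a small Python sketch. The `PartitionedStore` class and its hash-based partitioning scheme are assumptions for illustration only; the real services use Service Fabric partitioning, which is not shown here.

```python
import hashlib


class PartitionedStore:
    """Hypothetical key-value store split across hash partitions."""

    def __init__(self, num_partitions):
        self.partitions = [dict() for _ in range(num_partitions)]
        self.partitions_searched = 0  # counts partition lookups, as a cost proxy

    def _partition_for(self, key):
        # A stable hash maps each key to exactly one partition.
        digest = hashlib.sha256(key.encode()).digest()
        return int.from_bytes(digest[:4], "big") % len(self.partitions)

    def put(self, key, value):
        self.partitions[self._partition_for(key)][key] = value

    def search_fan_out(self, key):
        # Asks every partition; latency is set by the slowest one.
        result = None
        for partition in self.partitions:
            self.partitions_searched += 1
            if key in partition:
                result = partition[key]
        return result

    def search_targeted(self, key):
        # Asks only the partition that can hold the key.
        self.partitions_searched += 1
        return self.partitions[self._partition_for(key)].get(key)
```

With 16 partitions, the fan-out search touches 16 partitions per lookup while the targeted search touches one. In a real system the fan-out cost is worse than the count suggests, because the request waits for the slowest partition to respond.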

Our next action was to start a benchmark service that consistently and constantly ran high traffic in our pre-production environments. Our goal here was to catch performance regressions. We also began running a consistent traffic load (equivalent to production loads) across all services in our pre-production environments. We made a practice of treating a performance drop in our pre-production environment as a major blocker for production releases.
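One simple way to turn "a performance drop blocks the release" into an automated check is to compare a latency percentile from the candidate build against a baseline. The Python sketch below shows that idea only; the function names, the choice of the 95th percentile, and the 10% tolerance are hypothetical and not the thresholds we actually used.

```python
def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list; pct is an integer from 1 to 100."""
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100 avoids floating-point rounding surprises.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def blocks_release(baseline_ms, candidate_ms, pct=95, tolerance=0.10):
    """Return True when the candidate's latency regressed beyond the tolerance."""
    baseline = percentile(baseline_ms, pct)
    candidate = percentile(candidate_ms, pct)
    return candidate > baseline * (1 + tolerance)


if __name__ == "__main__":
    baseline = list(range(1, 101))            # hypothetical latencies in msec
    slower = [x * 1.5 for x in baseline]      # a build that is 50% slower
    print("block release:", blocks_release(baseline, slower))
```

Gating on a high percentile rather than the mean keeps tail latency regressions from hiding behind a healthy average.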

Together, these two actions became the norm in the engineering organization, and we are proud of this positive culture change in meeting the performance goal.

#4: Engineering Agility

As called out in the first post in this series, Intune is composed of many independent and decoupled Service Fabric services. The development and deployment of these services, however, are genuinely monolithic in nature. They deploy as a single unit, and all services are developed in a single large repo – essentially, a monolith. This setup was an intentional decision when we started our modern service journey because a large portion of the team was focusing on the re-architecture effort and our cloud engineering maturity was not yet fully realized. For these reasons we chose simplicity over agility. As we dramatically increased our feature investments (both in terms of the number of features and the number of engineers working on them), we started experiencing agility issues. The solution was decoupling the services in the monolith from development, deployment, and maintenance perspectives.

To do this we set three primary goals for improving agility:

- Building a service should complete within minutes (this was down from 7+ hrs)

- Pull requests should complete in minutes (down from 1+ day)

- Deployments to our pre-prod environments should occur several times per day (up from once or twice per week)

As indicated above, our agility was initially hurting us when it came to rapidly delivering features. Pull requests (PR) would sometimes take days to complete due to the aforementioned monolithic nature of the build environments – this meant that any change anywhere by anyone in Intune would impact everyone’s PR. On any given day, the churn was so high that it was extremely hard to get stable builds and fast builds or PRs. This, in turn, impacted our ability to deploy this massive build to our internal dogfood environments. In the best case, we were able to deploy once or twice per week. This, obviously, was not something we wanted to sustain.

We invested in decoupling the monolithic services to improve our agility. Over a period of 2+ years, we made two major improvements:

- Move to individual Git repos:

Services moved from a proprietary Source Depot monolith branch to their own individual Git repos. This decoupled development, PRs, unit and component tests, and builds. The change resulted in builds completing in around 30 minutes, a huge difference from the previous 7-8 hours or more.

- Carve out of Micro Services from Monolith:

Services were carved out of the Service Fabric application and packaged into their own applications, each becoming an independently deployable unit. We referred to such an application as a micro service.

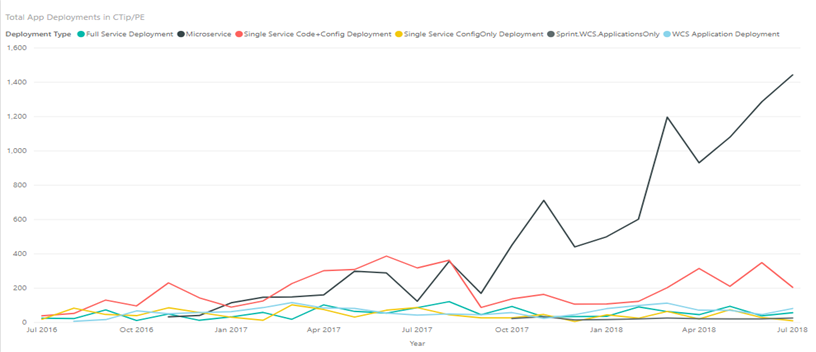

As this investment progressed and evolved, we started seeing huge benefits. The following demonstrate some of these:

- Build/PR Times:

For micro services, we reduced the typical build time for a service to within 30 minutes, from the previous 7+ hours. Similarly, the monolith saw an improvement to 2-3 hours from the 7 hours. A similar improvement happened in PR times as well, to a few minutes for micro services (from 1+ day).

- Deployments to Pre-prod Dogfood Environments:

With the monolith, successful deployments to pre-production dogfood environments would take us a minimum of 1 day and, in some extreme cases, up to a week. With the investments above, we are now able to complete several deployments per day across the monolith and micro services. This is primarily because of faster deployment times (due to the parallel deployments of micro services) and the number/volume of services that have been moved out of the monolith into their own micro services.

The chart below demonstrates one such example. The black line shows that we went from single digits to 1000’s of deployments per month in production environments. In pre-production dogfood environments, this was even higher – typically reaching 10’s of deployments per day across all the services in a single cluster.

Challenges:

Today, Intune is part monolith and part micro services. Eventually, we expect to compose 40-50 micro services from the existing monolith. There are challenges in managing micro services due to the way they are independently created and managed, and we are developing tooling to address some of these management issues. For example, binary dependencies between micro services are a problem because of versioning conflicts. To address this, we developed a dependency tool to identify conflicting or missing binary dependencies between micro services. Automation is also important when a critical fix needs to be rolled out across all micro services in order to mitigate a common library issue. Without proper tooling, it can be very hard and time consuming to propagate the fix to all micro services. Similarly, we are developing tooling to determine all the resources required by a micro service, as well as all the resource management aspects, such as key rotation, expiration, etc.
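To give a feel for what a dependency check like this involves, here is a small Python sketch that flags libraries referenced at more than one version across services. It is not our dependency tool; the manifest format, the function name `find_version_conflicts`, and the service and library names are all made up for illustration.

```python
from collections import defaultdict


def find_version_conflicts(manifests):
    """Map each library used at multiple versions to the services on each version.

    `manifests` is a hypothetical structure: {service: {library: version}}.
    """
    versions_by_library = defaultdict(lambda: defaultdict(list))
    for service, dependencies in manifests.items():
        for library, version in dependencies.items():
            versions_by_library[library][version].append(service)
    return {
        library: {version: sorted(services) for version, services in versions.items()}
        for library, versions in versions_by_library.items()
        if len(versions) > 1  # only libraries with more than one version conflict
    }


if __name__ == "__main__":
    manifests = {
        "enrollment": {"Common.Logging": "2.1.0", "Common.Auth": "1.4.0"},
        "checkin": {"Common.Logging": "2.3.0", "Common.Auth": "1.4.0"},
        "policy": {"Common.Logging": "2.1.0"},
    }
    for library, versions in find_version_conflicts(manifests).items():
        print(library, versions)
```

The same inverted index (library to version to services) also answers the patching question: given a library with a critical fix, it lists every micro service that needs to pick up the new version.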

Learnings

There were 3 learnings from this experience that are applicable to any large-scale cloud service:

- It is critically important to set realistic and achievable goals for SLA and scale – and then be persistent and diligent in driving towards achieving these goals. The best outcomes happen when a set of engineers from across the org work together as a unit towards a common goal. Once you have this in place, make incremental changes; the cumulative effect of all the small changes pays significant dividends over time.

- Continuous profiling is a critical element of cloud service performance. It helps reduce resource consumption and tail latencies, and it indirectly benefits all runtime aspects of a service.

- Micro services help in improving agility. Proper tooling to handle patches, deployments, dependencies, and resource management is critical to deploy and operate micro services in a high-scale distributed cloud service.

Conclusion

The improvements that came about from this stage of our cloud journey have been incredibly encouraging, and we are proud of operating our services with high SLA and performance while also rapidly increasing the scale of our traffic and services.

The next stage of our evolution will be covered in Part 4 of this series: A look at our efforts to make the Intune service even more reliable and efficient by ensuring that the rollout of new features produces minimal-to-no impact to existing feature usage by customers – all while continuing to improve our engineering agility.